Over the past decade, near-infrared reflectance spectrometers (NIRS) have gone from the lab to the field and can now fit into the palm of your hand. But how do these systems work, and why is calibration such an important consideration? And how can their output be incorporated into your decision-making?

NIRS relies on the assumption that the near-infrared light reflected from a sample is determined by the sample’s chemical makeup. While this assumption is advantageous, there are some caveats. Although we cannot see near-infrared light, it obeys the same physical laws as visible light. Consequently, the light measured is influenced not only by the chemical makeup of the sample but also by its physical properties. For example, have you ever misjudged the color of an object because its surface finish affected how light was scattered or reflected? Additionally, the interactions among chemicals and their overlapping absorption of light make predicting the chemical makeup of a sample even more challenging.

Calibration is key

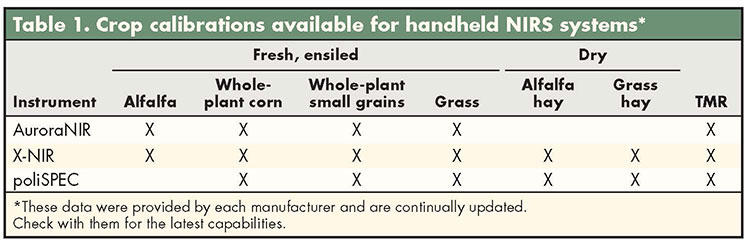

Managing variability is the job of the spectrometer’s calibration; consequently, the calibration is the most important part of the NIRS method. Table 1 lists the calibrations available for current hand-held commercial systems.

You’ll note that a separate calibration is used for each crop species and for fresh or ensiled crops. This is because the manufacturer has found that separating them improves calibration performance. In calibration development, it is important either to control variability so that it doesn’t influence the calibration or to include it so that the calibration can account for it.

The results obtained from these hand-held NIRS units probably won’t be quite as accurate as those from a commercial testing lab. Recall our discussion about controlling variability. NIRS forage testing laboratories work tirelessly not only to dry (water is a strong absorber of near-infrared light) and grind your forage samples but also to maintain their instruments and calibrations. The laboratories have also implemented protocols to monitor their instruments’ performance daily. The calibrations used at many laboratories are maintained by a consortium of laboratories that monitor calibration performance and share samples to improve prediction accuracy.

Furthermore, the reference method used to calibrate your NIRS unit may differ from the one used by the forage testing laboratory. Before investing, it’s worth asking the manufacturer of the NIRS system which reference methods they used, especially if you plan to use the instrument in conjunction with periodic laboratory testing, a good practice in my opinion.

On-farm NIRS does have some advantages over laboratory NIRS. Results are rapid and can be readily incorporated into management decisions. Additionally, you can scan more samples, which lets you base decisions on a more representative sample, assess the variability in your feedstuffs, and track historical trends in analysis.
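To illustrate how scanning more samples helps, here is a minimal Python sketch that summarizes repeated dry matter predictions from one feedstuff. The function name `summarize_scans` and the readings are hypothetical, made up for illustration only; the point is that the mean of several scans is a more representative estimate than any single scan, while the standard deviation and range describe how much the feed actually varies.

```python
import statistics


def summarize_scans(dm_readings: list[float]) -> dict[str, float]:
    """Summarize repeated dry matter predictions (percent) from one feedstuff.

    The mean gives a more representative estimate than a single scan;
    the standard deviation and range describe the feed's variability.
    A standard deviation needs at least two readings.
    """
    if len(dm_readings) < 2:
        raise ValueError("need at least two scans to assess variability")
    return {
        "mean": statistics.mean(dm_readings),
        "stdev": statistics.stdev(dm_readings),
        "range": max(dm_readings) - min(dm_readings),
    }


# Hypothetical readings (percent dry matter), made up for illustration only.
readings = [34.0, 36.0, 35.0, 33.0, 37.0]
summary = summarize_scans(readings)
print(f"{len(readings)} scans: mean {summary['mean']:.1f}%, "
      f"SD {summary['stdev']:.2f}, range {summary['range']:.1f} points")
```

Logging these summaries over time is one simple way to build the historical record mentioned above.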

Monitor forage variability

Published accuracies will help you determine how predictions from your handheld NIR unit could be used to assess variability in your feeding program. For example, let’s consider a producer who would like to use a handheld NIR spectrometer to manage dry matter in corn silage. The manufacturer of the unit under consideration reports an accuracy of plus or minus 1.5 percent dry matter.

What exactly does that mean?

It isn’t always clear and typically requires a discussion with the instrument provider. Generally, however, it means that if the unit predicts a sample of corn silage to be 35 percent dry matter, the actual dry matter content is likely between 33.5 percent and 36.5 percent.
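The arithmetic behind this reading of a reported accuracy fits in a few lines of Python. This is a minimal sketch assuming the manufacturer’s figure is a symmetric plus-or-minus band in percentage points of dry matter; the function name `accuracy_band` is hypothetical. If the figure is actually a standard error of prediction, the band covers most, but not all, samples.

```python
def accuracy_band(predicted_dm: float, accuracy: float) -> tuple[float, float]:
    """Return the (low, high) dry matter range implied by a +/- accuracy.

    Assumes `accuracy` is a symmetric band in percentage points of dry
    matter, e.g. 1.5 for a reported "plus or minus 1.5 percent".
    """
    if accuracy < 0:
        raise ValueError("accuracy must be non-negative")
    return predicted_dm - accuracy, predicted_dm + accuracy


low, high = accuracy_band(35.0, 1.5)
print(f"Actual dry matter likely between {low:.1f}% and {high:.1f}%")
# prints: Actual dry matter likely between 33.5% and 36.5%
```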

How much does dry matter vary in your forages?

I think it’s safe to say that it varies by more than plus or minus 1.5 percent; consequently, these instruments have utility in managing ration dry matter. Several studies in the U.S. and Europe support this conclusion.

What about managing dry matter in other feed ingredients?

First, a separate calibration for that ingredient would need to exist. Next, you would compare the variability of the ingredient with the accuracy of the instrument. For example, a 2015 study of compositional variability in feed ingredients found that soybean meal dry matter varied by 1.9 percent. If the NIR spectrometer’s dry matter prediction accuracy for soybean meal were plus or minus 1.5 percent, that system would have little utility in managing soybean meal moisture, because the instrument’s error would be nearly as large as the variation it is meant to detect. One must also consider the economics of managing soybean meal based on dry matter.
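The comparison in this paragraph can be written as a simple screen. This is a hypothetical sketch, not a published method: it assumes the ingredient’s variability and the instrument’s accuracy are expressed in the same units, and it uses an arbitrary rule of thumb that the variation should be at least twice the instrument’s error before predictions are worth acting on. The function name `nirs_has_utility` and the 2.0 cutoff are assumptions to adjust for your own operation.

```python
def nirs_has_utility(variability: float, accuracy: float,
                     min_ratio: float = 2.0) -> bool:
    """Screen whether NIRS predictions can track an ingredient's variation.

    variability: how much the constituent varies in the ingredient
    accuracy: the instrument's reported +/- accuracy, in the same units
    min_ratio: hypothetical cutoff; variation must be at least this many
        times the instrument error to count as useful
    """
    if accuracy <= 0:
        raise ValueError("accuracy must be positive")
    return variability / accuracy >= min_ratio


# Soybean meal dry matter figures from the example in the text.
ratio = 1.9 / 1.5
print(f"variability-to-accuracy ratio: {ratio:.2f}")
print("useful for managing dry matter:", nirs_has_utility(1.9, 1.5))
# prints:
# variability-to-accuracy ratio: 1.27
# useful for managing dry matter: False
```

The same check can be repeated for any other constituent once its variability and the instrument’s accuracy for it are known.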

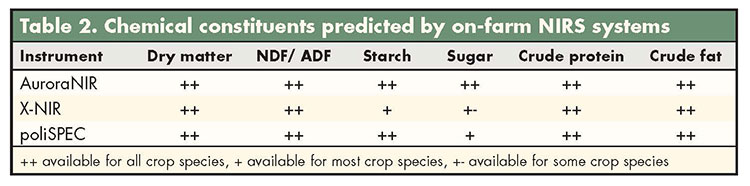

This process should be extended to each and every chemical constituent that your operation would like to predict and manage (for example, neutral detergent fiber, starch, and crude protein), keeping in mind that NIRS is particularly sensitive to organic molecules.

I hope this article sheds some light (pun intended) on the utility of and the growing number of options that producers have for in-hand forage analysis with NIRS.

This article appeared in the April/May 2018 issue of Hay & Forage Grower on pages 32 and 33.
